
TinyML on Arduino using Edge Impulse

- Idris Zainal Abidin

- 02 Sep 2021


Introduction

Edge Impulse is a platform for building machine learning projects that run on microcontrollers. This tutorial is divided into several parts, and we will update it as new parts are added.

Part 1 – Link the board to Edge Impulse

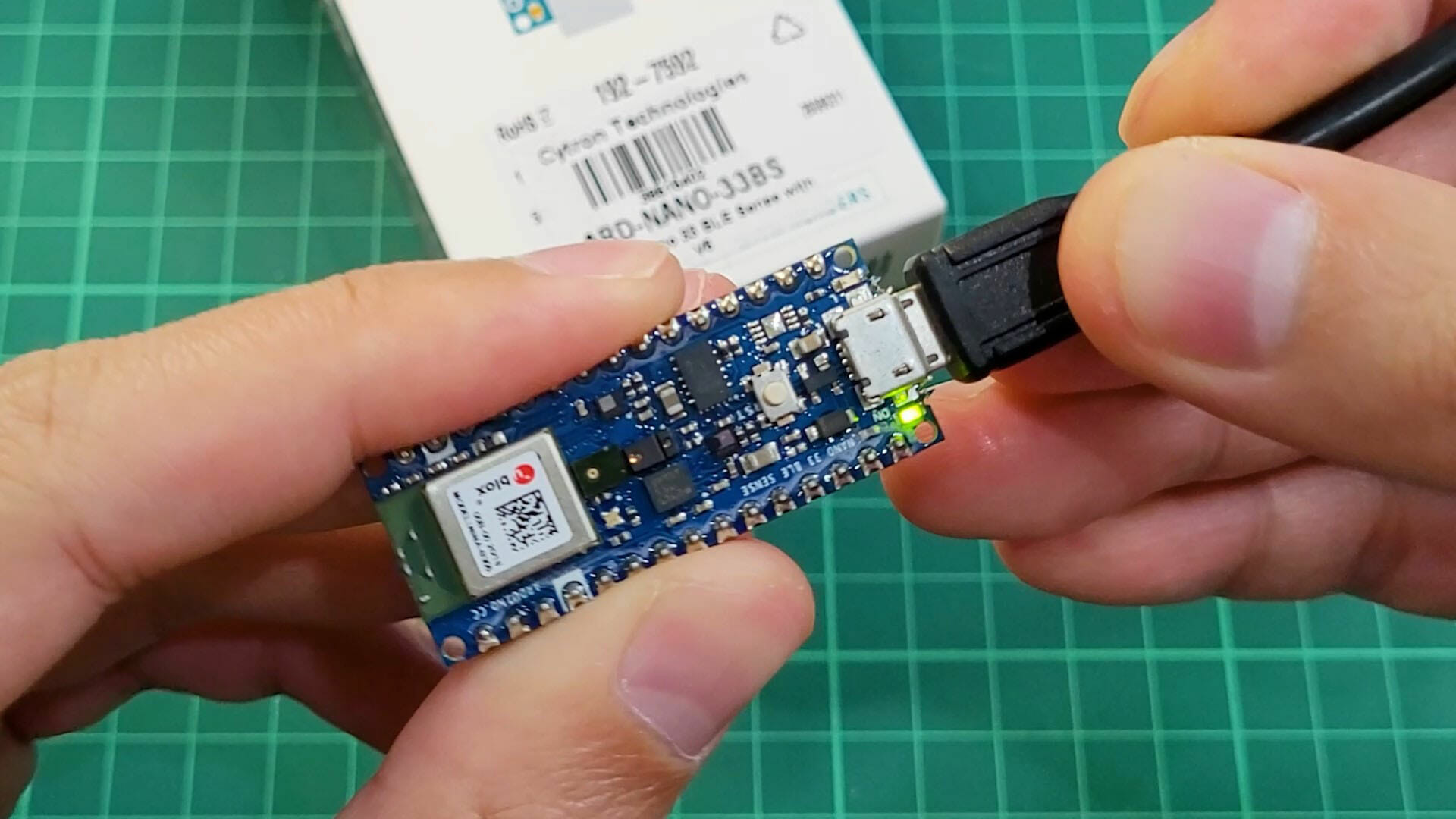

Hardware Preparation

This is the list of items used in the video.

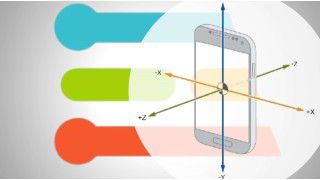

Part 2 – Data acquisition

*Note: I had a problem where the microphone on the Arduino board did not seem to record anything after the second attempt. If you run into the same problem, try resetting the Arduino board and reconnecting it to Edge Impulse.

Part 3 – Train ML Model

In part 3, we will train the ML model using the data collected in part 2. Later, we will deploy the model to the Arduino board.

Part 4 – Control LED color with voice

In part 4, we will program the Arduino board with the trained ML model. Then we will modify the program so that voice commands control the LED color.
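After each inference, the exported Edge Impulse Arduino library reports a confidence score for every label in the model. Before the LED can react, the program has to turn those scores into a single command. The sketch below shows one common way to do that: take the highest-scoring label and ignore it if the score falls below a threshold. It is written as plain C++ so the logic can be tested off the board; the `Prediction` struct, the `pickCommand` function, the labels `red`/`green`/`blue` and the 0.6 threshold are all assumptions for illustration, not part of the original sample program.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one entry of the classifier output:
// a label and its confidence score between 0 and 1.
struct Prediction {
    std::string label;
    float value;
};

// Returns the label with the highest confidence, or an empty string when
// there are no predictions or the best score is below `threshold`
// (treated as "no command heard").
std::string pickCommand(const std::vector<Prediction>& predictions, float threshold) {
    assert(threshold >= 0.0f && threshold <= 1.0f);
    std::size_t best = 0;
    bool found = false;
    for (std::size_t i = 0; i < predictions.size(); ++i) {
        if (!found || predictions[i].value > predictions[best].value) {
            best = i;
            found = true;
        }
    }
    if (!found || predictions[best].value < threshold) {
        return "";
    }
    return predictions[best].label;
}
```

A threshold keeps the LED from flickering on weak or ambiguous matches; raising it makes the board stricter about what counts as a command, at the cost of missing quieter or less clear utterances.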

Sample Program

This is the sample program for Arduino to control LED color using voice.
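The part of the program that drives the LED can be sketched separately from the classifier. One detail worth knowing is that the onboard RGB LED on the Nano 33 BLE Sense is active-low: writing LOW to a channel turns it on and HIGH turns it off. The standalone C++ below maps a recognised keyword to the level each channel should receive. The `Color` enum, the `colorForCommand` and `levelsFor` functions, and the keywords `red`/`green`/`blue` are hypothetical; substitute the keywords you actually trained.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Colours the LED can show. The keyword-to-colour mapping is an assumption.
enum class Color { Off = 0, Red = 1, Green = 2, Blue = 3 };

// Logic levels for the red, green and blue channels.
// Active-low LED: false (LOW) turns a channel on, true (HIGH) turns it off.
struct RgbLevels {
    bool red;
    bool green;
    bool blue;
};

// Translates a recognised keyword into a colour; any other label
// (for example a noise or unknown class) switches the LED off.
Color colorForCommand(const std::string& command) {
    if (command == "red") return Color::Red;
    if (command == "green") return Color::Green;
    if (command == "blue") return Color::Blue;
    return Color::Off;
}

// Looks up the channel levels for a colour.
RgbLevels levelsFor(Color color) {
    static const RgbLevels table[] = {
        {true, true, true},    // Off: all channels HIGH
        {false, true, true},   // Red: only red LOW
        {true, false, true},   // Green: only green LOW
        {true, true, false},   // Blue: only blue LOW
    };
    const std::size_t index = static_cast<std::size_t>(color);
    assert(index < sizeof(table) / sizeof(table[0]));
    return table[index];
}
```

On the board, each field would then be written out with `digitalWrite`, for example `digitalWrite(LEDR, levels.red ? HIGH : LOW)`, and likewise for `LEDG` and `LEDB`, after configuring those pins with `pinMode(..., OUTPUT)` in `setup()`.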

Thank You

References:

- Edge Impulse with the Nano 33 BLE Sense

- Edge Impulse: Arduino Nano 33 BLE Sense

- Introduction to Embedded Machine Learning

Thanks for reading this tutorial. If you have any technical inquiries, please post them on the Cytron Technical Forum.

"Please be reminded, this tutorial is prepared for you to try and learn.

You are encouraged to improve the code for a better application."

Related Products

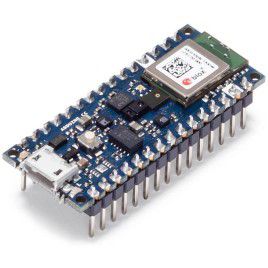

Arduino Nano 33 BLE Sense with Headers

Discontinued

International

International Singapore

Singapore Malaysia

Malaysia Thailand

Thailand Vietnam

Vietnam