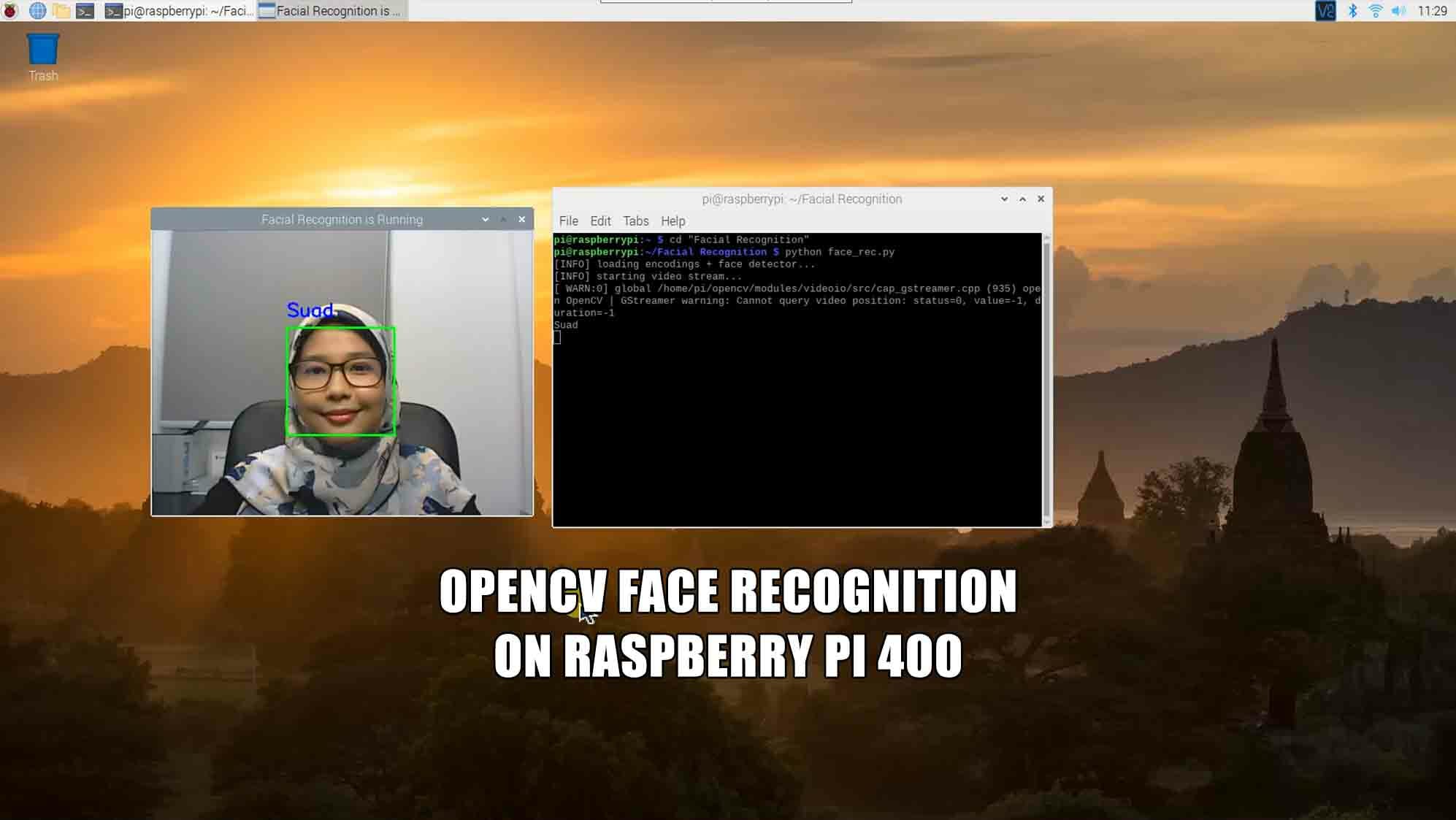

Face Recognition Using OpenCV on Raspberry Pi 400

Introduction

A face recognition system is a technology that can match human faces in digital images or video frames against a database of faces. Although humans recognize faces with little effort, facial recognition is a challenging pattern recognition problem in computing. The system must identify a human face, which is three-dimensional and changes appearance with lighting and facial expression, from a two-dimensional image. To do this, a face recognition system performs four steps. First, face detection segments the face from the background of the image. Second, the segmented face image is aligned to account for head pose, image size, and photographic properties such as lighting and grayscale. This alignment makes it possible to locate facial features accurately in the third step, feature extraction, in which features such as the eyes, nose, and mouth are located and measured to represent the face. Fourth, the resulting feature vector, a robust description of the face, is matched against the face database.
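The matching step (step 4) can be sketched in plain Python as a nearest-neighbour search with a rejection threshold. This is a generic illustration, not necessarily the method used in the tutorial's sample program: the function names `match_face` and `euclidean`, the three-number feature vectors, and the threshold value are all hypothetical. In a real system the vectors would come from the feature-extraction step (step 3).

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical feature vector type: in a real system these numbers would be
# produced by the feature-extraction step (step 3), not written by hand.
Vector = List[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_face(probe: Vector,
               database: Dict[str, Vector],
               threshold: float) -> Tuple[Optional[str], float]:
    """Step 4 (sketch): return (name, distance) of the closest enrolled face,
    or (None, distance) if even the closest face is farther than threshold."""
    best_name: Optional[str] = None
    best_dist = math.inf
    for name, enrolled in database.items():
        d = euclidean(probe, enrolled)
        if d < best_dist:
            best_name, best_dist = name, d
    if best_dist > threshold:
        # No enrolled face is close enough: treat the probe as "unknown".
        return None, best_dist
    return best_name, best_dist


if __name__ == "__main__":
    # Hypothetical database of two enrolled people.
    db = {
        "alice": [0.10, 0.80, 0.30],
        "bob": [0.90, 0.20, 0.50],
    }
    print(match_face([0.12, 0.78, 0.31], db, threshold=0.2))
    print(match_face([0.50, 0.50, 0.90], db, threshold=0.2))
```

The threshold is what lets the system answer "unknown" instead of always returning the nearest enrolled person; its value depends entirely on how the feature vectors are computed and would need tuning on real data.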

Video

This video shows how I use OpenCV to make a simple face recognition system on Raspberry Pi 400.

Hardware Preparation

This is the list of items used in the video.

Sample Program

This is a Python 3 sample program for OpenCV face recognition on the Raspberry Pi. You can run it in the Thonny Python IDE.

References:

Thanks for reading this tutorial. If you have any technical inquiries, please post them on the Cytron Technical Forum.

"Please note that this tutorial is intended for you to try out and learn from. You are encouraged to improve the code for a better application."